Microsoft unveils first in-house AI and cloud chips in trend towards custom silicon

- The Maia 100 chip will provide Microsoft Azure cloud customers with a new way to develop and run AI programs that generate content

- Making its own chips could insulate Microsoft from becoming too dependent on any one supplier, a vulnerability underscored by a scramble for Nvidia’s AI chips

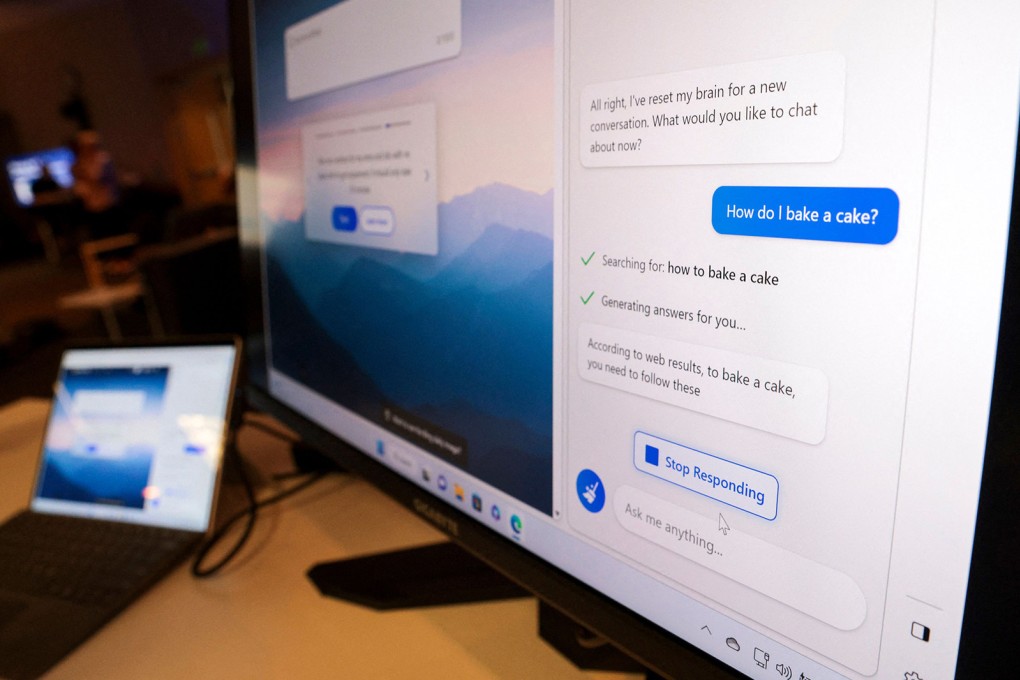

Microsoft unveiled its first home-grown artificial intelligence chip and cloud-computing processor in an attempt to take more control of its technology and ramp up its offerings in the increasingly competitive market for AI computing. The company also announced new software that lets clients design their own AI assistants.

The Maia 100 chip, announced at the company’s annual Ignite conference in Seattle on Wednesday, will provide Microsoft Azure cloud customers with a new way to develop and run AI programs that generate content. Microsoft is already testing the chip with its Bing and Office AI products, said Rani Borkar, a vice-president who oversees Azure’s chip unit. Microsoft’s main AI partner, ChatGPT maker OpenAI, is also testing the processor. Both Maia and the server chip, Cobalt, will debut in some Microsoft data centres early next year.

“Our goal is to ensure that the ultimate efficiency, performance and scale is something that we can bring to you from us and our partners,” Microsoft chief executive officer Satya Nadella said at the conference. Maia will power Microsoft’s own AI apps first and then be available to partners and customers, he added.

Microsoft’s multi-year investment shows how critical chips have become to gaining an edge in both AI and the cloud. Making them in-house lets companies wring performance and price benefits from the hardware. The initiative could also insulate Microsoft from becoming overly dependent on any one supplier, a vulnerability underscored by the industry-wide scramble for Nvidia’s AI chips. Microsoft’s push into processors follows similar moves by cloud rivals. Amazon.com acquired a chip maker in 2015 and sells services built on several kinds of cloud and AI chips. Google began letting customers use its AI accelerator processors in 2018.

For a company of Microsoft’s scale, “it’s important to optimise and integrate” every element of its hardware to provide the best performance and avoid supply-chain bottlenecks, Borkar said in an interview. “And really, at the end of the day, to give customers the infrastructure choice.”

Microsoft will also sell customers services based on Nvidia’s latest H200 chip and Advanced Micro Devices’ MI300X processor, both intended for AI tasks, some time next year. Still, the industry seems to be embarking on a lasting shift towards in-house chips. The transition is particularly bad news for Intel, whose own AI chip efforts are running behind. Meanwhile, with Cobalt, Microsoft is joining efforts by Amazon and AMD to grab share in the server chip market, which Intel currently dominates.

Maia is designed to help AI systems more quickly process the massive amounts of data required for tasks such as recognising speech and images. Azure Cobalt is a central processing unit that will come with 128 computing cores (or mini processors), putting it in the same league as products from Intel and AMD. More cores are generally better because work can be divided into small tasks that run at the same time. Cobalt also uses Arm Holdings designs, which proponents say are inherently more efficient because they were developed from designs used in battery-powered devices like smartphones. Both chips will be manufactured by Taiwan Semiconductor Manufacturing Co.
