Emerging US-China AI arms race undermines their leadership in global standards

- Both recognise the revolutionary potential of AI and want to lead the global AI debate but are unwilling to engage directly

- But with both also intent on integrating AI into their respective militaries, the task of bringing the international community together might have to rest with others

Other provisions in the executive order include establishing safety standards that must be met before an AI system can be publicly released, and the labelling of AI-generated content. Exactly how the US would implement such standards, given that it prides itself on giving its technology ecosystem the creative freedom to innovate, remains to be seen.

Given the political influence that tech companies in the US possess, it’s likely that these standards would prove merely symbolic. Still, the intent is clear; the US wants to be at the forefront of the global AI debate.

Other major AI initiatives were also announced by US Vice-President Kamala Harris on November 1. Of particular importance was the endorsement by 31 countries of the US-led “Political Declaration on the Responsible Military Use of AI and Autonomy”. The declaration, first announced in February, aims to “build international consensus around responsible behavior and guide states’ development, deployment, and use of military AI”.

Both Biden’s executive order and Harris’ announcement also emphasised the importance of working with other states to build trustworthy AI. Biden’s order vowed to ensure AI is “interoperable” with its international partners, and to work with its “allies and partners” to build a “strong international framework to govern the development and use of AI”.

China’s endorsement of the Bletchley Declaration, issued at the UK’s AI Safety Summit on November 1, is notable. Speaking on the matter, China’s Vice-Minister of Science and Technology Wu Zhaohui called for “global collaboration to share knowledge and make AI technologies available to the public under open source terms”.

Biden’s executive order can be seen as a direct response to China’s AI ambitions. Both states recognise the potential for AI to be the most revolutionary technology in human history, and as such, want to lead the global AI debate.

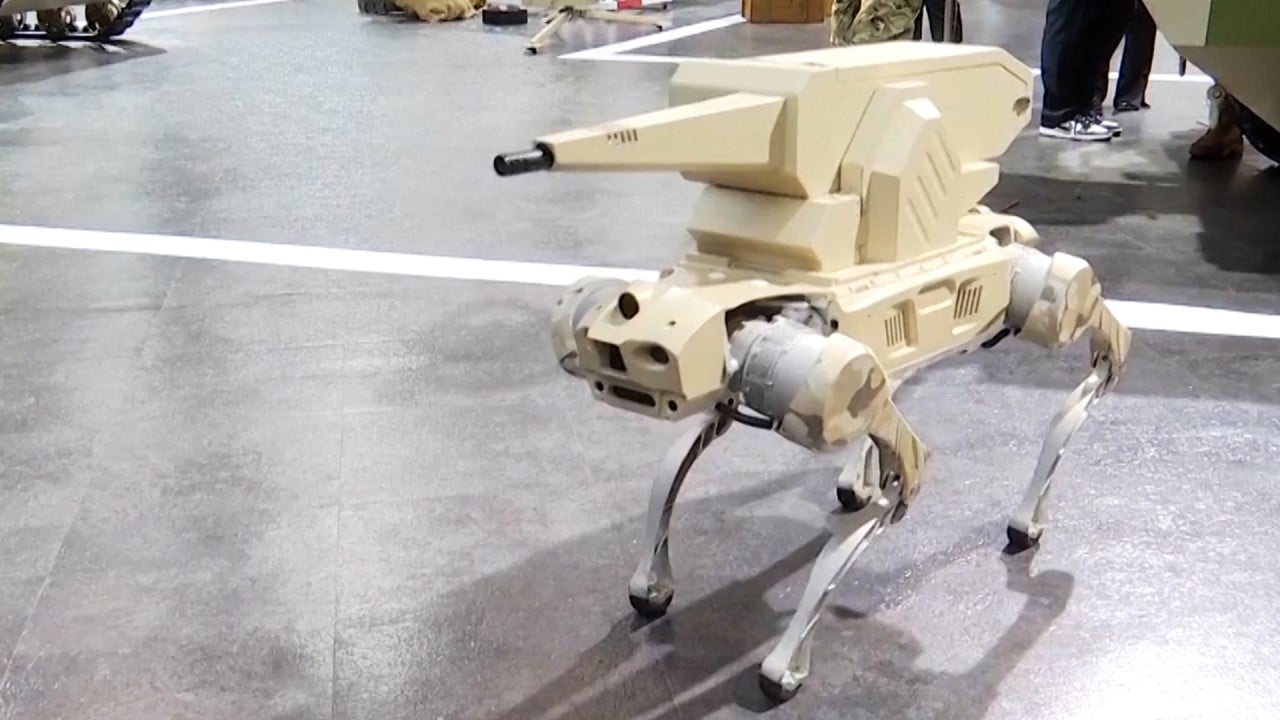

Last week, the first UN resolution on lethal autonomous weapons systems was adopted by a vote of 164 to 5, with eight abstentions. The resolution stressed the urgent need to address the concerns raised by lethal autonomous weapons systems, including “the risk of an emerging arms race” and “lowering the threshold for conflict and proliferation”.

While this is a positive first step, the resolution needs to be backed up by further action. The unfortunate reality is that, with major states investing heavily in the military applications of AI, it might take a major catastrophe before they properly understand the serious risks associated with AI.

Shayan Hassan Jamy is a research officer at the Strategic Vision Institute (SVI), Islamabad
