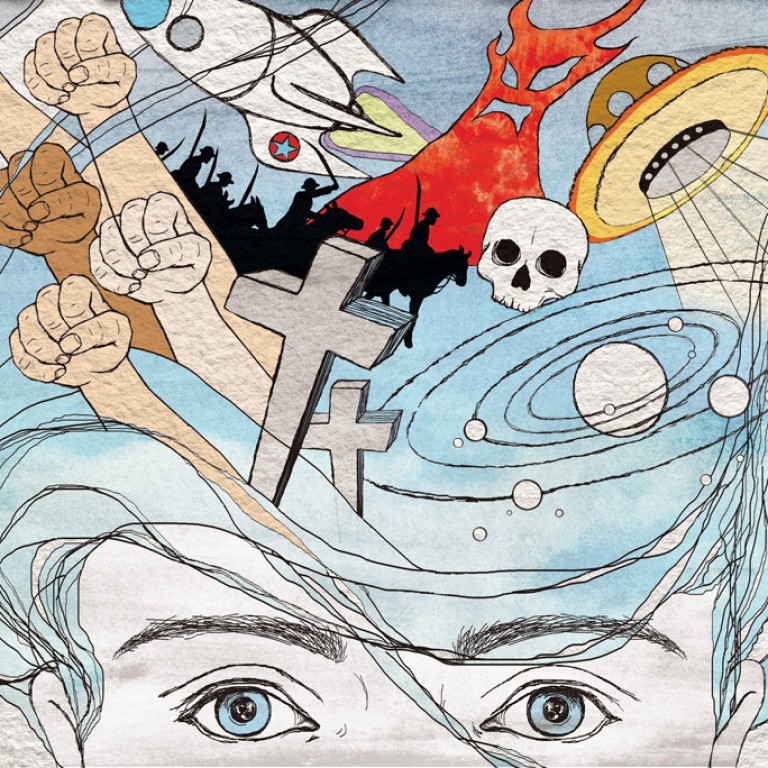

Beliefs – why do we have them and how did we get them?

Believe it or not, many of the most important things we hold to be true are not the product of fact and reason. Graham Lawton explains.

The day I sat down to write this article, the news was much like that of any other day. A teenager had been found guilty of plotting to behead a British soldier. Fighting had broken out again in Ukraine. Greece was accusing its creditors of being motivated by ideology rather than economic reality. Some English football fans were filmed racially abusing a man on the Paris subway. In post-Occupy Hong Kong, a clash over “artistic differences” within the Hong Kong Chinese Orchestra was turning nasty. Admittedly, each of that day’s stories was unique. But at root they were all about the same thing: the powerful and very human attribute we call belief.

Beliefs define how we see the world and act within it; without them, there would be no plots to behead soldiers, no war, no economic crises, no racism and no showdowns between musicians. There would also be no cathedrals, no nature reserves, no science and no art. Whatever beliefs you hold, it’s hard to imagine life without them. Beliefs, more than anything else, are what make us human. They also come so naturally that we rarely stop to think how bizarre belief is.

In 1921, philosopher Bertrand Russell put it succinctly when he described belief as “the central problem in the analysis of mind”.

Believing, he said, was “the most ‘mental’ thing we do” – by which he meant the most removed from the “mere matter” that our brains are made of. How can a physical object like a human brain believe things? Philosophy has made little progress on Russell’s central problem.

But increasingly, scientists are stepping in.

“We once thought that human beliefs were too complex to be amenable to science,” says Frank Krueger, a neuroscientist at George Mason University in Virginia. “But that era has passed.”

What is emerging is a picture of belief that is quite different from common-sense assumptions about it – one that has the potential to change some widely held beliefs about ourselves. Beliefs are fundamental to our lives, but when it comes to what we believe and why, it turns out we have a lot less control than we might think.

Our beliefs come in many shapes and sizes, from the trivial and the easily verified – I believe it will rain today – to profound leaps of faith – I believe in God. Taken together they form a personal guidebook to reality, telling us not just what is factually correct but also what is right and good, and hence how to behave towards one another and the natural world. This makes them arguably not just the most mental thing our brains do but also the most important.

“The prime directive of the brain is to extract meaning. Everything else is a slave system,” says psychologist Peter Halligan, at Cardiff University, in Britain. Yet, despite their importance, one of the long-standing problems with studying beliefs is identifying exactly what it is you are trying to understand.

“Everyone knows what belief is until you ask them to define it,” says Halligan.

What is generally agreed is that belief is a bit like knowledge, but more personal.

Knowing something is true is different from believing it to be true; knowledge is objective, but belief is subjective. It is this leap-of-faith aspect that gives belief its singular, and troublesome, character.

Philosophers have long argued about the relationship between knowing and believing. In the 17th century, René Descartes and Baruch Spinoza clashed over this issue while trying to explain how we arrive at our beliefs.

Descartes thought understanding must come first; only once you have understood something can you weigh it up and decide whether to believe it or not.

Spinoza didn’t agree. He claimed that to know something is to automatically believe it; only once you have believed something can you un-believe it. The difference may seem trivial but it has major implications for how belief works.

If you were designing a belief-acquisition system from scratch, it would probably look like the Cartesian one. Spinoza’s view, on the other hand, seems implausible.

If the default state of the human brain is to unthinkingly accept what we learn as true, then our common-sense understanding of beliefs as something we reason our way to goes out of the window. Yet, strangely, the evidence seems to support Spinoza. For example, young children are extremely credulous, suggesting that the ability to doubt and reject requires more mental resources than acceptance.

Similarly, fatigued or distracted people are more susceptible to persuasion. And when neuroscientists joined the party, their findings added weight to Spinoza’s view.

The neuroscientific investigation of belief began in 2008, when Sam Harris, at the University of California, Los Angeles, put people into a brain scanner and asked them whether they believed various written statements. Some were simple factual propositions, such as “California is larger than Rhode Island”; others were matters of personal belief, such as “There is probably no God”. Harris found that statements people believed to be true produced little characteristic brain activity – just a few brief flickers in regions associated with reasoning and emotional reward. In contrast, disbelief produced longer and stronger activation in regions associated with deliberation and decision-making, as if the brain had to work harder to reach a state of disbelief. Statements the volunteers did not believe also activated regions associated with emotion, but in this case with pain and disgust.

Harris’ results were widely interpreted as further confirmation that the default state of the human brain is to accept. Belief comes easily; doubt takes effort. While this doesn’t seem like a smart strategy for navigating the world, it makes sense in the light of evolution. If the sophisticated cognitive systems that underpin belief evolved from more primitive perceptual ones, they would retain many of the basic features of these simpler systems. One of these is the uncritical acceptance of incoming information. This is a good rule when it comes to sensory perception as our senses usually provide reliable information. But it has saddled us with a non-optimal system for assessing more abstract stimuli such as ideas.

Further evidence that this is the case has come from studying how and why belief goes wrong.

“When you consider brain damage or psychiatric disorders that produce delusions, you can begin to understand where belief starts,” says Halligan.

Such delusions include beliefs that seem bizarre to outsiders but completely natural to the person concerned. For example, people sometimes believe that they are dead, that loved ones have been replaced by impostors, or that their thoughts and actions are being controlled by aliens. And, tellingly, such delusions are often accompanied by disorders of perception, emotional processing or “internal monitoring” – knowing, for example, whether you initiated a specific thought or action.

These deficits are where the delusions start, suggests Robyn Langdon, an associate professor in the department of cognitive science at Macquarie University in Sydney, Australia. People with delusions of alien control, for example, often have faulty motor monitoring, so they fail to register actions they have initiated as their own. Likewise, people with the delusion known as “mirror-self misidentification” fail to recognise their own reflection. They often also have a sensory deficit called mirror agnosia: they don’t “get” reflective surfaces. A mirror looks like a window, and if asked to retrieve an object reflected in one they will try to reach into the mirror or around it. Their senses are telling them that the person in the mirror isn’t them, and so they believe this to be true. Again, we trust the evidence of our senses, and if they tell us that black is white, we generally do well to believe them.

You may think that you would never be taken in like that but, says Langdon, “we all default to such believing, at least initially”.

Consider the experience of watching a magic show. Even though you know it’s all an illusion, your instinctive reaction is that the magician has altered the laws of physics.

Misperceptions are not delusions, of course. Witnessing someone being sawn in half and put back together doesn’t mean we then believe that people can be safely sawn in half. What’s more, sensory deficits do not always lead to delusional beliefs. So what else is required? Harris’ brain-imaging studies delivered an important clue: belief involves both reasoning and emotion.

The formation of delusional belief probably also requires the emotional weighing-up process to be disrupted in some way. It may be that brain injury destroys it altogether, causing people to simply accept the evidence of their senses. Or perhaps it just weakens it, lowering the evidence threshold required to accept a delusional belief.

For example, somebody with a brain injury that disrupts their emotional processing of faces may think “the person who came to see me yesterday looked like my wife, but didn’t feel like her, maybe it was an impostor. I will reserve judgment until she comes back”. The next meeting feels similarly disconnected and so the hypothesis is confirmed and the delusion starts to grow.

According to Langdon, this is similar to what goes on in the normal process of belief formation. Both involve incoming information together with unconscious reflection on that information until a “feeling of rightness” arrives, and a belief is formed.

This two-stage process could help explain why people without brain damage are also surprisingly susceptible to strange beliefs. The first problem is our natural credulity, which is particularly likely to lead us astray when we are faced with claims based on ideas that are difficult to verify with our senses – “9/11 was an inside job”, for example. The second problem is the “feeling of rightness”, which appears to be extremely fallible.

So where does the feeling of rightness come from? The evidence suggests it has three main sources – our evolved psychology, personal biological differences and the society we keep.

The importance of evolved psychology is illuminated by perhaps the most important belief system of all: religion. Although the specifics vary widely, religious belief per se is remarkably similar across the board. Most religions feature a familiar cast of characters: supernatural agents, life after death, moral directives and answers to existential questions. Why do so many people believe such things so effortlessly? According to the cognitive by-product theory of religion, their intuitive rightness springs from basic features of human cognition that evolved for other reasons.

In particular, we tend to assume that agents cause events. A rustle in the undergrowth could be a predator or it could just be the wind, but it pays to err on the side of caution; our ancestors who assumed agency would have survived longer and had more offspring. Likewise, our psychology has evolved to seek out patterns because this was a useful survival strategy.

During the dry season, for example, animals are likely to congregate by a waterhole, so that’s where you should go hunting. Again, it pays for this system to be overactive.

This potent combination of hypersensitive “agenticity” and “patternicity” has produced a human brain that is primed to see agency and purpose everywhere. And agency and purpose are two of religion’s most important features – particularly the idea of an omnipotent but invisible agent that makes things happen and gives meaning to otherwise random events. In this way, humans are naturally receptive to religious claims, and when we first encounter them – typically as children – we unquestioningly accept them. There is a “feeling of rightness” about them that originates deep in our cognitive architecture.

According to Krueger, all beliefs are acquired in a similar way: “Beliefs are on a spectrum but they all have the same quality. A belief is a belief.”

Our agent-seeking and pattern-seeking brain usually serves us well, but it also makes us susceptible to a wide range of weird and irrational beliefs, from the paranormal and supernatural to conspiracy theories, superstitions, extremism and magical thinking. And our evolved psychology underpins other beliefs, too, including dualism – viewing the mind and body as separate entities – and a natural tendency to believe that the group we belong to is superior to others.

A second source of rightness is more personal. When it comes to something like political belief, the assumption has been that we reason our way to a particular stance. But, over the past decade or so, it has become clear that political belief is rooted in our basic biology. Conservatives, for example, generally react more fearfully than liberals to threatening images, scoring higher on measures of arousal such as skin conductance and eye-blink rate. This suggests they perceive the world as a more dangerous place and perhaps goes some way to explaining their stance on issues like law and order and national security.

Another biological reflex that has been implicated in political belief is disgust. As a general rule, conservatives are more easily disgusted by stimuli such as fart smells and rubbish. And disgust tends to make people of all political persuasions more averse to morally suspect behaviour, although the response is stronger in conservatives. This has been proposed as an explanation for differences of opinion over important issues such as gay marriage and illegal immigration. Conservatives often feel strong revulsion at these violations of the status quo and so judge them to be morally unacceptable. Liberals are less easily disgusted and less likely to judge them so harshly.

These instinctive responses are so influential that people with different political beliefs literally come to inhabit different realities. Many studies have found that people’s beliefs about controversial issues align with their moral positions on them. Supporters of capital punishment, for example, often claim that it deters crime and rarely leads to the execution of innocent people; opponents say the opposite. That might simply be because we reason our way to our moral positions, weighing up the facts at our disposal before reaching a conclusion. But there is a large and growing body of evidence to suggest that belief works the other way. First we stake out our moral positions, and then we mould the facts to fit.

So if our moral positions guide our factual beliefs, where do morals come from? The short answer: not from conscious reasoning.

According to Jonathan Haidt, professor of ethical leadership at New York University’s Stern School of Business, our moral judgments are usually rapid and intuitive; people jump to conclusions and only later come up with reasons to justify their decision. To see this in action, try confronting someone with a situation that is offensive but harmless, such as using their national flag to clean a toilet. Most will insist this is wrong but fail to come up with a rationale, and fall back on statements such as “I can’t explain it, I just know it’s wrong”.

The way moral intuitions shape factual beliefs becomes clear when you ask people questions that include both a moral and a factual element, such as: “Is forceful interrogation of terrorist suspects morally wrong, even when it produces useful information?” or “Is distributing condoms as part of a sex-education programme morally wrong, even when it reduces rates of teenage pregnancy and STDs?” People who answer “yes” to such questions are also likely to dispute the facts, or produce their own alternative facts to support their belief.

Opponents of condom distribution, for example, often state that condoms don’t work so distributing them won’t do any good anyway.

What feels right to believe is also powerfully shaped by the culture in which we grow up. Many of our fundamental beliefs are formed during childhood. According to Krueger, the process begins as soon as we are born, based initially on sensory perception – that objects fall downwards, for example – and later expands to more abstract ideas and propositions.

Not surprisingly, the outcome depends on the beliefs you encounter.

“We are social beings. Beliefs are learned from the people you are closest to,” says Krueger. It couldn’t be any other way. If we all had to construct a belief system from scratch based on direct experience, we wouldn’t get very far.

This isn’t simply about proximity; it is also about belonging. Our social nature means we adopt beliefs as badges of cultural identity. This is often seen with hot-potato issues, where belonging to the right tribe can be more important than being on the right side of the evidence. Acceptance of climate change, for example, has become a shibboleth in the US – conservatives on one side, liberals on the other. Evolution and vaccination are similarly divisive.

So, what we come to believe is shaped to a large extent by our culture, biology and psychology. By the time we reach adulthood, we tend to have a relatively coherent and resilient set of beliefs that stay with us for the rest of our lives. These form an interconnected belief system with a relatively high level of internal consistency. But the idea that this is the product of rational, conscious choices is highly debatable.

“If I’m totally honest, I didn’t really choose my beliefs: I discover I have them,” says Halligan. “I sometimes reflect upon them, but I struggle to look back and say, ‘What was the genesis of this belief?’”

The upshot of all this is that our personal guidebook of beliefs is both built on sand and highly resistant to change.

“If you hear a new thing, you try to fit it in with your current beliefs,” says Halligan.

That often means going to great lengths to reject something that contradicts your position, or seeking out further information to confirm what you already believe.

That’s not to say that people’s beliefs cannot change. Presented with enough contradictory information, we can and do change our minds.

Many atheists, for example, have reasoned their way to irreligion. Often, though, rationality is not what wins out, even here. Instead, we are more likely to change our beliefs in response to a compelling moral argument – and when we do, we reshape the facts to fit our new belief. More often than not, however, we simply cling to our beliefs.

All told, the uncomfortable conclusion is that some, if not all, of our fundamental beliefs about the world are based not on facts and reason – or even misinformation – but on gut feelings that arise from our evolved psychology, basic biology and culture. The results of this are plain to see: political deadlock, religious strife, evidence-free policymaking and a bottomless pit of mumbo jumbo. Even worse, the deep roots of our troubles are largely invisible to us.

“If you hold a belief, by definition you hold it to be true,” says Halligan. “Can you step outside your beliefs? I’m not sure you’d be capable.”

The world would be a boring place if we all believed the same things.

But it would surely be a better one if we all stopped believing in our beliefs quite so strongly.

New Scientist

What’s your delusion?

Normal people believe in the strangest things. About half of American adults endorse at least one conspiracy theory, belief in paranormal or supernatural phenomena is widespread, and superstition and magical thinking are nearly universal.

Surprisingly large numbers of people also hold beliefs that a psychiatrist would class as delusional. In 2011, psychologist Peter Halligan, at Cardiff University, in Wales, assessed how common such beliefs were in Britain (see below). He found that more than 90 per cent of people held at least one, to some extent. They included the belief that a celebrity is secretly in love with you; that you are not in control of some of your actions; and that people say or do things that contain special messages for you. None of Halligan’s subjects were troubled by their strange beliefs. Nonetheless, the fact they are so common suggests the “feeling of rightness” that accompanies belief is not always a reliable guide to reality.

1) Your body, or part of your body, is misshapen or ugly - 46.4 per cent

2) Certain people are out to harm or discredit you - 33.8 per cent

3) You are an exceptionally gifted person who others do not recognise - 40.5 per cent

4) There is another person who looks and acts like you - 32.7 per cent

5) You are not in control of some of your actions - 44.3 per cent

6) Your thoughts are not fully under your control - 33.6 per cent

7) Certain places are duplicated, i.e. are in two locations at the same time - 38.7 per cent

8) Some people are duplicated, i.e. are in two places at the same time - 26.2 per cent

9) People say or do things that contain special messages for you - 38.5 per cent

10) People you know disguise themselves as others to manipulate or influence you - 24.9 per cent