A short pause in development of powerful AI can ensure proper data and privacy controls in the long run

- Time is needed to develop a shared safety protocol to manage the massive data used in AI training, which is often pulled from the internet and may include user conversations

- Tech companies – and AI developers in particular – have a duty to review and critically assess the implications of their AI systems for data privacy and ethics, and ensure guidelines are adhered to

The potential of generative AI to transform many industries by increasing efficiency and uncovering novel insights is immense. Several tech giants have already started to explore how to implement generative AI models in their productivity software.

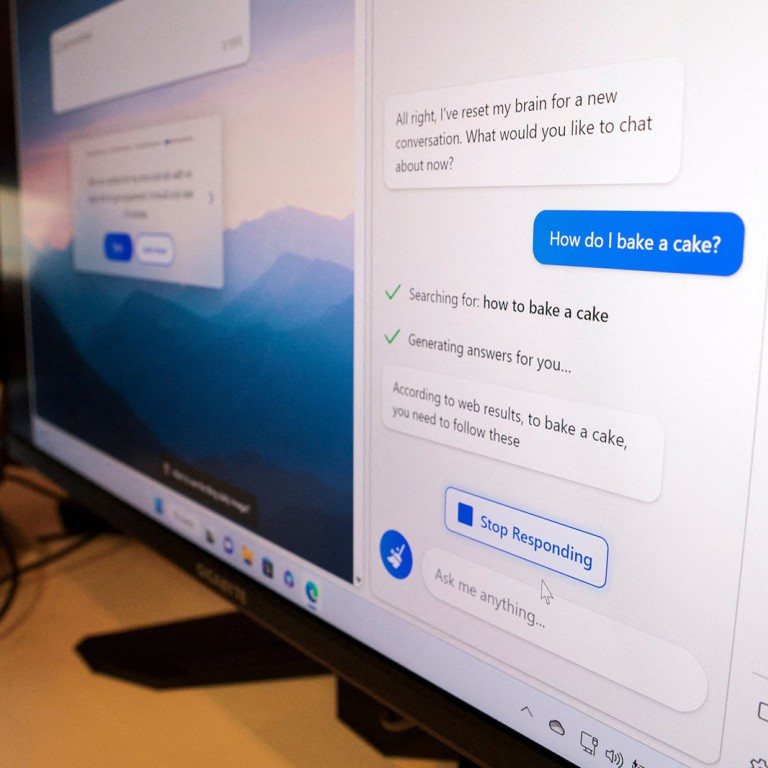

In particular, general-knowledge AI chatbots based on large language models, like ChatGPT, can help draft documents, create personalised content, respond to employee inquiries and more. One of the world’s biggest investment banks has reportedly “fine-tune trained” a generative AI model to answer questions from its financial advisers on its investment recommendations.

The operation of generative AI depends heavily on deep learning technology, which involves analysis of a massive amount of unstructured data, often carried out with little supervision. Training data is reportedly collected and copied from the internet and may include anything from sensitive personal information to trivia.

Many AI developers are keen to keep their data sets proprietary and tend to disclose as little detail as possible about their data collection processes. As a result, there is a risk that personal data may not be collected fairly or on an informed basis, which poses privacy risks.

And, since user conversations could be used as new training data for AI models, users may be inadvertently feeding sensitive information to different AI systems. This makes the personal data they input susceptible to misuse and raises questions about whether the "limitation of use" principle, under which personal data should be used only for the purpose for which it was collected, is adhered to.

Broader questions on how data subjects can enforce their access and correction rights, how children’s privacy should be safeguarded and how data security risks could be minimised in the development and use of generative AI should also be addressed.

To further complicate the picture, the ethical risks of inaccurate information, discriminatory content or biased output in the use of generative AI cannot be overlooked. To quote the well-recognised Asilomar AI Principles: “Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.”

Is this the juncture for such planning and management and, more importantly, control? It is noteworthy that the recent open letter from the Future of Life Institute does not call for a pause on AI development in general, but "merely a stepping back from the dangerous race to ever-larger unpredictable black-box models with emergent capabilities".

While the open letter calls for a pause of at least six months in the training of AI systems more powerful than GPT-4, tech companies – and AI developers in particular – have a duty to review and critically assess the implications of their AI systems for data privacy and ethics, and ensure that laws and guidelines are adhered to.


The Guidance on the Ethical Development and Use of Artificial Intelligence, issued by the Office of the Privacy Commissioner for Personal Data in 2021, recommends internationally recognised ethical AI principles covering accountability, human oversight, transparency and interpretability, fairness, data privacy, and beneficial AI, as well as reliability, robustness and security. These are all principles that organisations should observe during the development and use of generative AI to mitigate risks.

AI developers are often in the best position to adopt a privacy-by-design approach, which can mitigate the possible privacy risks for users. For instance, techniques such as anonymisation can ensure that all identifiers of the data subjects have been removed from the training data. A fair and transparent data-collection policy would also foster users' trust in AI systems.

Generative AI presents an exciting yet complicated landscape with opportunities waiting to unfold. Its rapid spread should be a signal for all stakeholders, in particular tech companies and AI developers, to join hands in creating a safe and healthy ecosystem to ensure this transformative technology is used for the common good.

Ada Chung Lai-ling is Hong Kong’s privacy commissioner for personal data